Dec 20 2018

A research team led by the University of California San Diego has devised a neuron-inspired hardware-software co-design method that could make neural network training faster and more energy efficient.

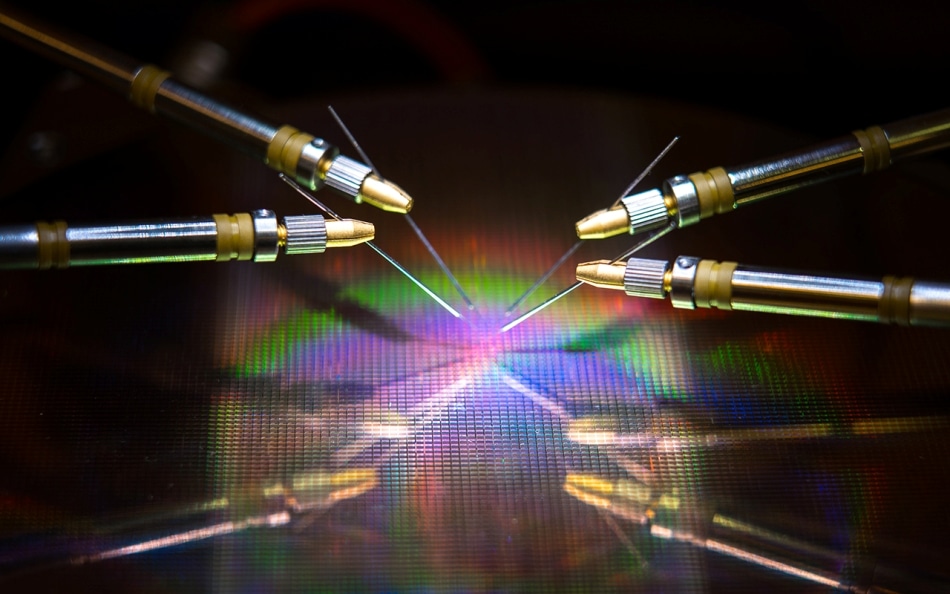

A UC San Diego-led team has developed hardware and algorithms that could cut energy use and time when training a neural network. (Image credit: David Baillot/UC San Diego Jacobs School of Engineering)

In the future, the work could make it viable to train neural networks on low-power devices such as smartphones, laptops, and embedded devices. The advance is reported in a paper recently published in Nature Communications.

Training neural networks to perform tasks such as navigating self-driving cars, recognizing objects, or playing games takes a great deal of time and computing power. Massive computers with very large numbers of processors are usually needed to learn these tasks, and training times can stretch from weeks to months.

This is because these computations require data to be transferred back and forth between two separate units, the processor and the memory, and this transfer consumes most of the time and energy during neural network training, said Duygu Kuzum, senior author and professor of electrical and computer engineering at the Jacobs School of Engineering at UC San Diego.

To address this problem, Kuzum and her laboratory partnered with Adesto Technologies to create hardware and algorithms that allow these computations to be carried out directly in the memory unit, eliminating the need to repeatedly shuffle data.

“We are tackling this problem from two ends—the device and the algorithms—to maximize energy efficiency during neural network training,” stated Yuhan Shi, first author and PhD student in Kuzum’s research group at UC San Diego.

The hardware component is a highly energy-efficient type of non-volatile memory: a 512-kb subquantum Conductive Bridging RAM (CBRAM) array. It consumes 10 to 100 times less energy than today’s leading memory technologies. The device is based on Adesto’s CBRAM memory technology and has mainly been used as a digital storage device with only “0” and “1” states. However, Kuzum and her laboratory showed that it can also be programmed to hold multiple analog states, mimicking biological synapses in the human brain. This so-called synaptic device can be used to perform in-memory computing for neural network training.

On-chip memory in conventional processors is very limited, so they don’t have enough capacity to perform both computing and storage on the same chip. But in this approach, we have a high capacity memory array that can do computation related to neural network training in the memory without data transfer to an external processor. This will enable a lot of performance gains and reduce energy consumption during training.

Duygu Kuzum, Professor of Electrical and Computer Engineering, Jacobs School of Engineering, University of California San Diego.

Kuzum, who is affiliated with the Center for Machine-Integrated Computing and Security at UC San Diego, led efforts to develop algorithms that could be easily mapped onto this synaptic device array. The algorithms provided further time and energy savings during neural network training.

To implement unsupervised learning in the hardware, the method uses a spiking neural network, a type of energy-efficient neural network. On top of that, Kuzum’s group applied another energy-saving algorithm of their own design, called “soft-pruning.” This algorithm makes neural network training considerably more energy efficient without sacrificing much accuracy.

Energy-saving algorithms

Neural networks are a series of connected layers of artificial neurons, in which the output of one layer provides the input to the next. The strength of the connections between these layers is represented by so-called “weights.” Training a neural network involves updating these weights.

Traditional neural networks spend a great deal of energy continually updating every one of these weights. In spiking neural networks, however, only the weights connected to spiking neurons are updated. This means fewer updates, and therefore less computing power and time.
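The difference in update counts can be illustrated with a minimal Python sketch. It is not the team's learning rule: the function names, the layer size, and the uniform increment `delta` are all illustrative assumptions, chosen only to show that gating updates on spikes touches fewer weights.

```python
def dense_update(weights, delta):
    """Update every weight; return (new_weights, number_of_updates)."""
    new_weights = [[w + delta for w in row] for row in weights]
    return new_weights, sum(len(row) for row in weights)


def spike_gated_update(weights, spiked, delta):
    """Update only the rows whose neuron spiked; leave the others unchanged.

    `delta` is a placeholder increment (assumption), not a real learning rule.
    """
    new_weights = []
    updates = 0
    for row, fired in zip(weights, spiked):
        if fired:
            new_weights.append([w + delta for w in row])
            updates += len(row)
        else:
            new_weights.append(list(row))
    return new_weights, updates


# Hypothetical layer: 3 neurons with 4 input weights each.
weights = [[0.5] * 4 for _ in range(3)]
spiked = [False, True, False]  # only the second neuron spiked

_, dense_count = dense_update(weights, 0.1)
gated, gated_count = spike_gated_update(weights, spiked, 0.1)
print("dense updates:", dense_count)
print("spike-gated updates:", gated_count)
```

In this toy case the dense scheme touches all 12 weights, while the spike-gated scheme touches only the 4 weights of the neuron that fired.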

In addition, the network performs so-called unsupervised learning, meaning that it can essentially train itself. For instance, if the network is shown a series of handwritten digits, it will work out how to tell zeros, ones, twos, and so on apart. An advantage is that the network does not have to be trained on labeled examples (in other words, it does not have to be told that it is seeing a zero, one, or two), which is useful for autonomous applications such as navigation.

To make training even faster and more energy efficient, Kuzum’s lab created a new algorithm, which they termed “soft-pruning,” to use with the unsupervised spiking neural network. Soft-pruning finds weights that have already matured during training and sets them to a constant non-zero value. This stops them from being updated for the remainder of the training, which reduces computing power.
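A minimal Python sketch of this idea follows. The article does not say how a weight is judged to have "matured," so the threshold test here is a stand-in assumption, as are the names `soft_prune`, `apply_updates`, `prune_threshold`, and `frozen_value`.

```python
def soft_prune(weights, frozen, prune_threshold=0.2, frozen_value=0.05):
    """Freeze weights below prune_threshold at a constant non-zero value.

    The threshold test is a placeholder for the "matured" check, which the
    article does not specify (assumption).
    """
    for i, w in enumerate(weights):
        if not frozen[i] and w < prune_threshold:
            weights[i] = frozen_value
            frozen[i] = True


def apply_updates(weights, frozen, deltas):
    """Apply updates in place, skipping frozen weights; return updates done."""
    done = 0
    for i, d in enumerate(deltas):
        if not frozen[i]:
            weights[i] += d
            done += 1
    return done


weights = [0.9, 0.1, 0.6, 0.15]
frozen = [False] * len(weights)

soft_prune(weights, frozen)  # applied during training, not after it
updates_done = apply_updates(weights, frozen, [0.05] * len(weights))
print("frozen mask:", frozen)
print("updates performed:", updates_done)
```

Once a weight is frozen, every later training step skips it, which is where the saving in update operations comes from in this sketch.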

Soft-pruning differs from traditional pruning techniques because it is applied during training rather than after it. It can also lead to better accuracy when the trained network is put to the test. In traditional pruning, unimportant or redundant weights are usually removed entirely.

The drawback is that the more weights are pruned, the less accurately the network performs during testing. Soft-pruning, by contrast, simply keeps these weights in a low-energy setting, so they remain available to help the network perform with greater accuracy.
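The contrast can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the published method: the threshold of 0.2, the retained value of 0.05, and the helper names `prune` and `neuron_input` are all hypothetical.

```python
def prune(weights, threshold, pruned_value):
    """Replace every weight below threshold with pruned_value."""
    return [pruned_value if w < threshold else w for w in weights]


def neuron_input(inputs, weights):
    """Weighted sum of the inputs arriving at one neuron."""
    return sum(x * w for x, w in zip(inputs, weights))


weights = [0.9, 0.1, 0.6, 0.15]
hard = prune(weights, 0.2, 0.0)   # traditional pruning: weight removed (zero)
soft = prune(weights, 0.2, 0.05)  # soft-pruning: weight kept at a constant

# Test input that arrives only over the pruned connections.
inputs = [0, 1, 0, 1]
hard_signal = neuron_input(inputs, hard)
soft_signal = neuron_input(inputs, soft)
print("hard-pruned signal:", hard_signal)
print("soft-pruned signal:", soft_signal)
```

With the weights removed, the neuron receives no signal at all from those connections, whereas the soft-pruned weights still pass a small contribution through.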

Hardware-software co-design put to the test

The researchers implemented the neuro-inspired unsupervised spiking neural network and the soft-pruning algorithm on the subquantum CBRAM synaptic device array, and then trained the network to classify handwritten digits from the MNIST database.

During the tests, the network was able to classify digits with 93% accuracy even when around 75% of the weights were soft-pruned. In contrast, the network performed with less than 90% accuracy when only 40% of the weights were pruned through traditional pruning techniques.

With regard to energy savings, the researchers estimate that their neuro-inspired hardware-software co-design method can ultimately reduce energy consumption during neural network training by two to three orders of magnitude compared to the state of the art.

If we benchmark the new hardware to other similar memory technologies, we estimate our device can cut energy consumption 10 to 100 times, then our algorithm co-design cuts that by another 10. Overall, we can expect a gain of a hundred to a thousand fold in terms of energy consumption following our approach.

Duygu Kuzum, Professor of Electrical and Computer Engineering, Jacobs School of Engineering, University of California San Diego.
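The arithmetic behind this estimate can be checked in a few lines of Python. The factors are taken directly from the quote above; the variable names are illustrative, and the calculation simply assumes that the hardware and algorithm gains multiply.

```python
import math

hardware_gain = (10, 100)  # device vs. similar memory technologies (quoted range)
algorithm_gain = 10        # further factor from the algorithm co-design (quoted)

# Assumption: the two gains are independent and multiply.
total_gain = tuple(h * algorithm_gain for h in hardware_gain)
orders_of_magnitude = tuple(math.log10(g) for g in total_gain)

print("combined gain range:", total_gain)
print("orders of magnitude:", orders_of_magnitude)
```

The combined range of 100 to 1,000 times corresponds to the two to three orders of magnitude cited by the researchers.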

Looking ahead, Kuzum and her group plan to work with memory technology companies to take this work to the next stages. The team’s ultimate goal is to build a complete system in which neural networks can be trained in memory to perform more complex tasks on very low power and time budgets.