Drivers nowadays engage in an increasing number of tasks while driving, including the primary driving activity as well as interacting with the vehicle's entertainment system. Driver demand for non-driving related tasks (NDRTs) is continuously increasing as a result of automated driving (AD).

The primary driving function is performed by the automated vehicle (AV) in conditionally and fully automated driving. This opens up new opportunities to use AVs as mobile entertainment carriers and offices. The visual output is currently available via a variety of screens in the vehicle, including the dashboard, tablet, and head-up displays (HUDs).

Windshield displays (WSDs) expand HUDs to the full windscreen, allowing for significantly more viewing and interaction space. As more drivers seek adaptive and individualized interfaces and devices, WSDs offer the automotive industry a new opportunity to reduce visual clutter in the instrument cluster and center stack.

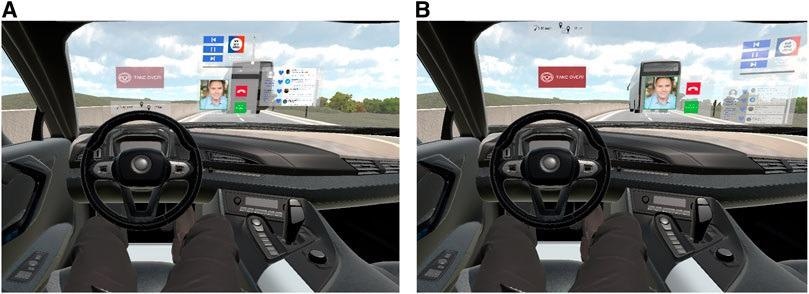

Condition M: Multiple floating windows displayed on a 3D WSD for SAE L3 AD. (A) Baseline, screen-fixed 2D WSD content proposed by Riegler et al. (2018) for SAE L3. (B) Example personalization using 3D WSD content for SAE L3. Image Credit: Riegler, A et al., Frontiers in Future Transportation 3

Although the potential of WSDs for AVs has been recognized, few reports are available on user preferences for content and layout customization in augmented reality (AR) WSDs, or on their impact on task and take-over performance.

About the Study

In the present study, the authors reported a user study (n=24) in which participants constructed their own personalized WSD layout in a virtual reality driving simulator. The study compared the benefits and drawbacks of presenting content in many content-specific windows versus a single primary window.

The AR WSDs were assessed in terms of user preferences, such as content type and layout, for Society of Automotive Engineers Level 3 (SAE L3) automated cars. Their impact on task and take-over performance was also examined.

WSDs enable drivers and passengers to engage in NDRTs on a large AR interface in conditionally and fully automated vehicles. For 3D WSDs, the authors therefore determined the visual properties of the personalized user interfaces (UIs) and their implications for user experience, workload, task performance, and take-over performance.

Content window snapped to the back of the front vehicle (in this case: a bus). Image Credit: Riegler, A et al., Frontiers in Future Transportation 3

Analyses of variance (ANOVAs) with Bonferroni correction and a 5% significance level were used to assess differences in task and take-over performance between condition M (multiple content-specific windows) and condition S (a single window, with its own placement and characteristics, used for all content types).

Where there were multiple comparisons, the significance threshold was changed from α = 0.05 to the Bonferroni-corrected α′. Cronbach's α was calculated for the multi-item self-rating scores, resulting in satisfactory reliability for all aspects of the National Aeronautics and Space Administration raw task load index (NASA RTLX), the technology acceptance model (TAM), and the short version of the user experience questionnaire (UEQ-S).
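The two statistics mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' analysis code: the function names are hypothetical, the number of comparisons is a placeholder (the article does not report it), and the ratings are invented for the example.

```python
from statistics import variance


def bonferroni_alpha(alpha: float, num_comparisons: int) -> float:
    """Return the Bonferroni-corrected per-comparison threshold, alpha / m."""
    if num_comparisons < 1:
        raise ValueError("num_comparisons must be at least 1")
    return alpha / num_comparisons


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a table with one row per respondent and one
    column per questionnaire item (sample variances are used throughout)."""
    num_items = len(scores[0])
    if num_items < 2 or len(scores) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    # Sum of the variances of the individual items (columns).
    item_variance_sum = sum(variance(column) for column in zip(*scores))
    # Variance of each respondent's total score (row sums).
    total_variance = variance([sum(row) for row in scores])
    return num_items / (num_items - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical values: 5 comparisons and made-up ratings from 4 respondents
# on a 3-item scale. Neither comes from the study.
corrected = bonferroni_alpha(0.05, 5)
ratings = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
]
reliability = cronbach_alpha(ratings)
print(f"corrected threshold: {corrected:.3f}, Cronbach's alpha: {reliability:.2f}")
```

A p-value is then compared against the corrected threshold rather than against 0.05. For Cronbach's α, values closer to 1 indicate that the items of a scale vary together more consistently.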

Observations

The researchers observed that the more peripheral windows were placed at a greater distance from the driver and were angled toward the driver. The individualized user interfaces diverged from the baseline concepts in numerous ways, such as location on the WSD, which was primarily controlled by the distance parameter. The TAM data showed that multi-window customization was more popular. However, the workload findings and subjective user experience showed no significant differences.

Post-experiment interviews revealed additional use cases for both WSD presentation styles, such as multi-window setups with peripheral content windows for conditionally automated driving with enhanced situational awareness.

User preferences also differed depending on the context. One primary content window resulted in improved task performance and shorter take-over times, whereas the multi-window UI provided a superior subjective user experience. In particular, a single primary window that encompassed all media types considerably enhanced task performance for text comprehension. Single-window UIs (condition S) were found effective for focusing on specialized tasks, whereas multi-window UIs (condition M) were found useful for lower-attention activities.

The study setting. The study participant is wearing the HTC Vive Pro Eye Virtual Reality headset, which includes speakers for audio output. The Logitech steering wheel and pedals are used for take-overs and manual driving. The microphone next to the steering wheel is used for speech input. The monitor is used by the study organizer to follow along. Image Credit: Riegler, A et al., Frontiers in Future Transportation 3

Conclusions

In conclusion, this study sheds light on how advances in automated driving point towards personalized 3D AR WSDs. The results imply that drivers choose to engage in work or entertainment activities, possibly with other passengers, and that vehicle-related or safety information becomes less important to the driver.

The authors concluded that feedback design should take the driver's viewing direction into account when displaying notifications or warnings in a specific area of the WSD. According to the study, as vehicle automation advances, motion sickness should be addressed in highly automated cars, particularly in conjunction with (opaque) WSD content.

Overall, the authors believe that the proposed “snap” technology will help automobile interface designers improve the user experience in self-driving cars. Based on the findings of this study, the researchers also suggested the future implementation and evaluation of an interaction management system for multimodal user interactions with windshield displays.
