Exploration of the solar system is gathering pace, with unmanned expeditions to Mars and the Moon seeking to discover details about our cosmic neighbors that will inform scientific progress and future crewed expeditions. To help missions navigate new worlds, a paper published in the journal Electronics has presented a deep reinforcement learning suite, MarsExplorer.

Study: MarsExplorer: Exploration of Unknown Terrains via Deep Reinforcement Learning and Procedurally Generated Environments. Image Credit: HelenField/Shutterstock.com

Exploring New Worlds

Scientists have been sending unmanned probes and rovers into the solar system for decades. With a broad range of purposes and targets, these unmanned vehicles have sent back a stunning array of scientific data.

Spacecraft currently operating on or in orbit around Mars include Perseverance, Tianwen-1, and Hope. More Mars missions are planned, as are several missions that will land on the lunar surface over the next few years. NASA also plans to return astronauts to the Moon within the next decade.

Navigating Unknown Terrain

However, there is a critical issue for unmanned, semi-autonomous spacecraft: navigating unknown and potentially hazardous terrain. Whilst a human can respond to unexpected terrain using their senses, an unmanned robotic vehicle cannot rely on human operators in real time, especially at such vast distances. It must rely on its programming, so software that can adapt to unknown terrain is essential.

The ability to explore and map previously unknown areas is key for missions using autonomous vehicles. It affects time-critical mission parameters, such as fuel depletion, and can ultimately be the difference between the success and failure of the mission itself. Deep reinforcement learning is a field of computer science that can provide software-based solutions to this mission-critical challenge.

What is Deep Reinforcement Learning?

Deep reinforcement learning is a field of machine learning that combines the principles of deep learning and reinforcement learning. Reinforcement learning lets computers learn by trial and error, while deep learning lets them make decisions from unstructured input data without manual feature engineering.
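To make the trial-and-error idea concrete, here is a minimal sketch of tabular Q-learning, a classic reinforcement learning method, on a hypothetical five-cell corridor. It covers only the reinforcement learning half of the definition: in deep reinforcement learning, a neural network would take the place of the lookup table `q`. The corridor, rewards and hyperparameters are illustrative assumptions, not anything taken from the MarsExplorer study.

```python
import random

# Hypothetical toy world: a corridor of cells 0..4. The agent starts at 0 and
# the goal is cell 4. Actions: 0 = move left, 1 = move right.
N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    nxt = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # q[s][a] estimates the discounted return of taking action a in cell s.
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: act randomly with probability epsilon (or on a
            # tie), otherwise exploit the current best estimate.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, action)
            # Q-learning update toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


def greedy_policy(q):
    """Best-known action per cell (ties resolve to 'move right')."""
    return [0 if q[s][0] > q[s][1] else 1 for s in range(N_STATES)]
```

Calling `greedy_policy(train())` should yield a policy that moves right (action 1) toward the goal; the estimates in `q` improve only because the agent keeps trying actions and observing the rewards that follow.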

Recent advances in hardware and algorithms have expanded the capabilities of reinforcement learning software, bringing its performance to a level equal to or better than that of humans in tasks such as the game Go, robotic manipulation, and multi-agent collaboration.

A milestone in reinforcement learning was the development of OpenAI Gym, a framework that standardizes common problems. This has led to a new generation of reinforcement learning frameworks and algorithms designed to tackle environments built in the OpenAI Gym format.
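OpenAI Gym's standardization centres on a small interface: `reset()` starts an episode and returns an observation, and `step(action)` returns the next observation, a reward, a done flag and an info dictionary (the classic four-value form; newer Gym and Gymnasium releases return five values from `step` and an `(observation, info)` pair from `reset`). The sketch below imitates the classic pattern for a toy grid-exploration task without importing Gym. The class name, cell codes, 3x3 sensor and "newly revealed cells" reward are all hypothetical and are not MarsExplorer's actual design.

```python
# Hypothetical cell codes for the robot's map (not MarsExplorer's encoding).
UNKNOWN, FREE, OBSTACLE, ROBOT = 0, 1, 2, 3
MOVES = {0: (-1, 0), 1: (1, 0), 2: (0, -1), 3: (0, 1)}  # up, down, left, right


class ToyExplorationEnv:
    """Minimal grid-exploration task following the classic Gym reset/step pattern."""

    def __init__(self, terrain, max_steps=100):
        self.terrain = terrain  # ground truth: True = obstacle; (0, 0) assumed free
        self.size = len(terrain)
        self.max_steps = max_steps

    def reset(self):
        """Start a new episode (must be called before step) and return the first observation."""
        self.pos = (0, 0)
        self.steps = 0
        self.known = [[UNKNOWN] * self.size for _ in range(self.size)]
        self._sense()
        return self._observation()

    def step(self, action):
        dr, dc = MOVES[action]
        r, c = self.pos[0] + dr, self.pos[1] + dc
        # Moves off the map or into an obstacle leave the robot in place.
        if 0 <= r < self.size and 0 <= c < self.size and not self.terrain[r][c]:
            self.pos = (r, c)
        self.steps += 1
        reward = self._sense()  # reward = number of newly revealed cells
        done = self.steps >= self.max_steps
        return self._observation(), reward, done, {}

    def _sense(self):
        """Reveal the 3x3 neighbourhood around the robot; return how many cells were new."""
        new = 0
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                r, c = self.pos[0] + dr, self.pos[1] + dc
                if 0 <= r < self.size and 0 <= c < self.size and self.known[r][c] == UNKNOWN:
                    self.known[r][c] = OBSTACLE if self.terrain[r][c] else FREE
                    new += 1
        return new

    def _observation(self):
        """Copy of the robot's map with its own position marked."""
        obs = [row[:] for row in self.known]
        obs[self.pos[0]][self.pos[1]] = ROBOT
        return obs
```

Because any agent that speaks this interface can interact with the environment, off-the-shelf algorithms can be swapped in without changing the task code, which is the practical benefit of a shared standard.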

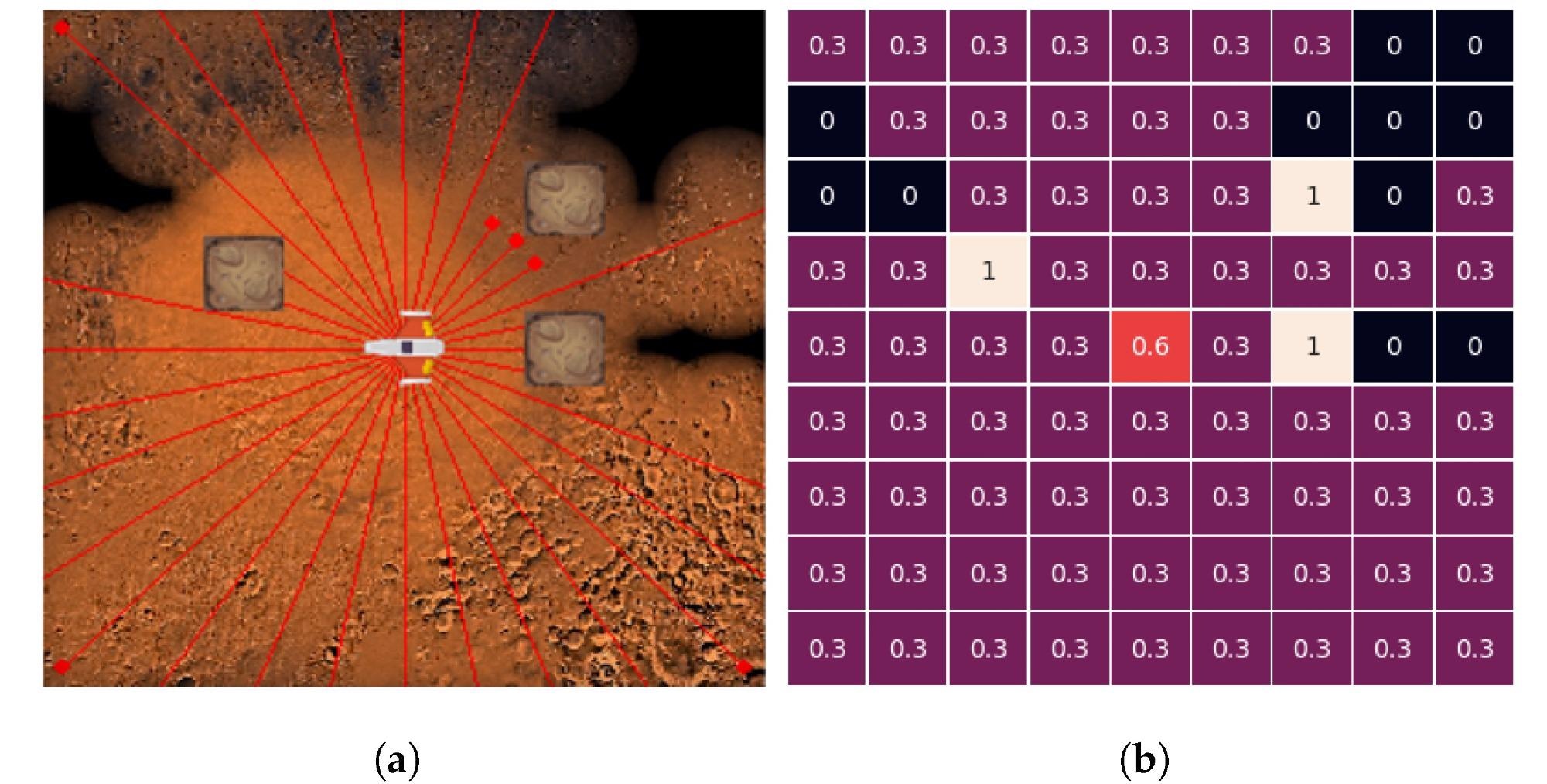

State encoding convention. (a) Graphical environment; (b) State s(t) representation. Image Credit: Koutras, D et al., Electronics


MarsExplorer: Using Deep Reinforcement Learning to Explore Unfamiliar Extraterrestrial Terrains

Breakthroughs in deep reinforcement learning have led to its application in path planning and exploration tasks for robots. Because rovers and other unmanned spacecraft must operate autonomously and navigate previously unmapped extraterrestrial areas, deep reinforcement learning frameworks are being developed and explored for this purpose.

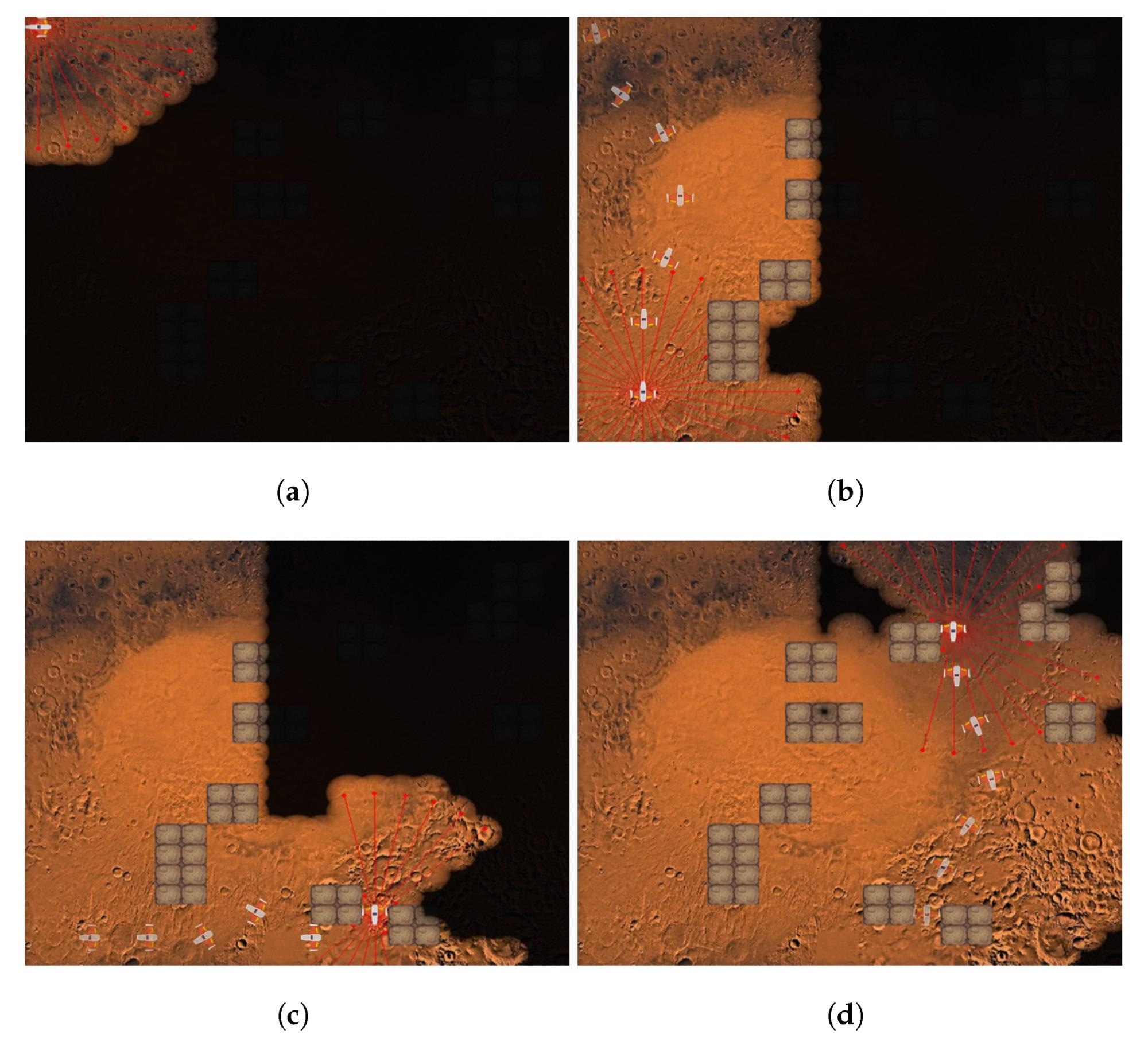

MarsExplorer is a deep reinforcement learning suite developed by a team of Greek scientists. It trains robots in procedurally generated environments with diverse terrains, allowing them to grasp the underlying structure of terrain and apply it to areas they have never explored. Training across many such environments gives the learned policies strong generalization abilities.
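Procedural generation can be illustrated with a seeded random obstacle map. In this minimal sketch, which assumes a square grid and a single obstacle-density parameter (both hypothetical simplifications; the study's own generator and difficulty settings are not described here), each seed reproduces one map exactly, while different seeds produce the variety that pushes a policy to generalize rather than memorize a single layout.

```python
import random


def generate_terrain(size, obstacle_density, seed):
    """Return a size x size grid where True marks an obstacle.

    The same seed always yields the same map; different seeds give varied layouts.
    """
    rng = random.Random(seed)
    terrain = [[rng.random() < obstacle_density for _ in range(size)] for _ in range(size)]
    terrain[0][0] = False  # keep the (hypothetical) start cell free
    return terrain


def training_maps(n_maps, size=8, obstacle_density=0.2, base_seed=0):
    """Yield a fresh but reproducible map for each training episode."""
    for i in range(n_maps):
        yield generate_terrain(size, obstacle_density, base_seed + i)
```

A training loop would draw a new map from `training_maps(...)` for each episode, and raising `size` or `obstacle_density` is one simple way to make the task harder.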

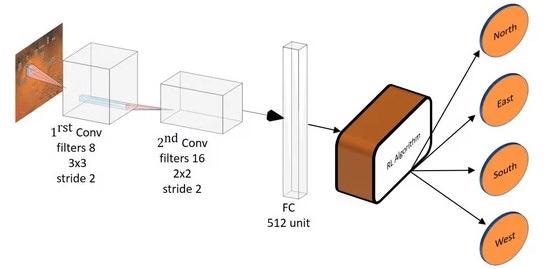

Policies learned in the MarsExplorer environment can be applied to real-world robotic platforms. The team evaluated four state-of-the-art reinforcement learning algorithms (Rainbow, PPO, SAC, and A3C) with the system, using average human performance within the environment as a further benchmark.

PPO was found to be the best-performing algorithm, and a follow-up evaluation of it was conducted at varying levels of difficulty. Additionally, a scalability study was carried out alongside a comparison with non-learning methodologies.
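For reference, PPO (proximal policy optimization) trains its policy by maximizing the clipped surrogate objective introduced by Schulman et al., shown below in its standard form. Here $r_t(\theta)$ is the probability ratio between the new and old policies for the action taken at step $t$, $\hat{A}_t$ is an advantage estimate, and $\epsilon$ is a small clipping constant, commonly set to around 0.2. The article does not report which PPO variant or hyperparameters the study used, so treat this as background rather than the authors' exact configuration.

$$
L^{\mathrm{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta),\,1-\epsilon,\,1+\epsilon\right)\hat{A}_t\right)\right],
\qquad
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}
$$

Clipping removes the incentive to push the ratio outside $[1-\epsilon, 1+\epsilon]$, which keeps each policy update small and is one reason PPO is widely regarded as a stable, general-purpose choice.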

The objective of the research was not to create another realistic simulator but to provide a framework in which both reinforcement learning and non-learning methods can be evaluated, serving as a benchmark for exploration and coverage tasks. In practice, the execution time of different algorithms also affects their suitability for a given task.
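A classic non-learning approach to exploration is frontier-based exploration: the robot repeatedly heads for the nearest frontier, a known free cell that borders unexplored space. The sketch below, assuming a square occupancy grid with hypothetical cell codes, finds that target with a breadth-first search and also computes a simple coverage fraction of the kind an exploration benchmark might report. It is an illustration only, not the baseline implementation or metric used in the study.

```python
from collections import deque

UNKNOWN, FREE, OBSTACLE = 0, 1, 2  # hypothetical cell codes for the robot's map


def coverage(known):
    """Fraction of map cells that have been observed (a simple coverage metric)."""
    cells = [cell for row in known for cell in row]
    return sum(cell != UNKNOWN for cell in cells) / len(cells)


def neighbours(r, c, size):
    """4-connected neighbours of (r, c) that lie inside a size x size grid."""
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < size and 0 <= nc < size:
            yield nr, nc


def is_frontier(known, r, c):
    """A frontier is a known free cell with at least one unknown neighbour."""
    return known[r][c] == FREE and any(
        known[nr][nc] == UNKNOWN for nr, nc in neighbours(r, c, len(known))
    )


def nearest_frontier(known, start):
    """Breadth-first search over known free cells to the closest frontier."""
    queue, seen = deque([start]), {start}
    while queue:
        r, c = queue.popleft()
        if is_frontier(known, r, c):
            return (r, c)
        for nr, nc in neighbours(r, c, len(known)):
            if (nr, nc) not in seen and known[nr][nc] == FREE:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return None  # no reachable frontier: exploration of reachable space is complete
```

A frontier-based explorer would move to `nearest_frontier(...)`, update its map from new sensor readings, and repeat until the function returns `None`; `coverage(...)` tracks progress along the way.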

Overview of the experimental architecture. Image Credit: Koutras, D et al., Electronics

The Future

Robotic explorers on other planetary bodies face multiple challenges, and MarsExplorer is designed to help address one of them: exploring unknown terrain. According to the study, it is the first OpenAI Gym-compatible reinforcement learning framework tailored to the exploration of unknown terrain.

Common off-the-shelf reinforcement learning algorithms can be trained in the system, and the resulting policies can be applied to a robot without an elaborate simulation model of its individual dynamics. This could help future unmanned missions explore the solar system by giving robotic explorers levels of autonomy that are difficult to achieve with conventional software and hardware.

Further Reading

Koutras, D. et al. (2021) MarsExplorer: Exploration of Unknown Terrains via Deep Reinforcement Learning and Procedurally Generated Environments. Electronics, 10(22), 2751. Available at: https://www.mdpi.com/2079-9292/10/22/2751/htm

Disclaimer: The views expressed here are those of the author expressed in their private capacity and do not necessarily represent the views of AZoM.com Limited T/A AZoNetwork the owner and operator of this website. This disclaimer forms part of the Terms and conditions of use of this website.