Sep 25 2018

From time to time, the hunt for functional materials (materials engineered with properties and features that allow them to do something remarkable) brings a significant change to the way we live. About 5,000 years ago, the discovery and use of bronze helped usher in nothing less than modern civilization. More recently, silicon's properties gave rise to the Information Age, and other materials led to products we now take for granted: plastics, composites, stainless steel, Gorilla Glass, titanium, even clothing that will not stain.

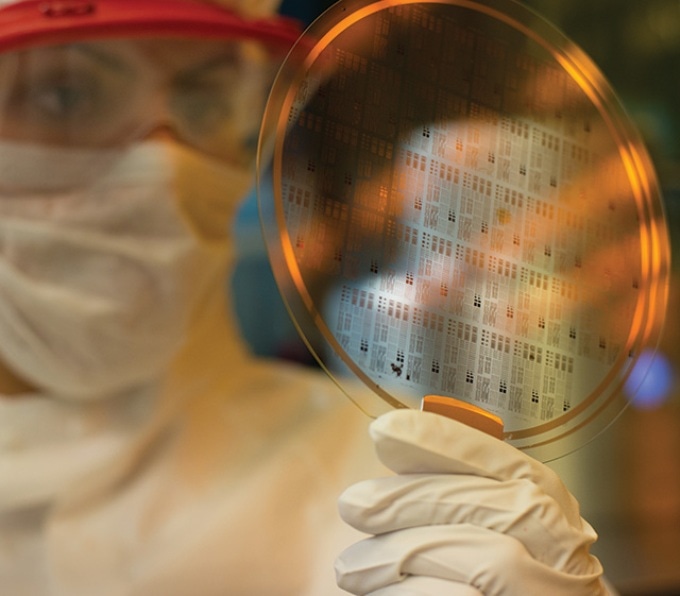

With support from the National Science Foundation's TRIPODS+X program, Lehigh University's Josh Agar is partnering with the University of California at Berkeley to increase the speed of materials discovery and development, with implications in fields including electronics, healthcare, and energy systems. (Photo courtesy Lehigh University)

However, discovering new materials is a drawn-out process, and Joshua Agar, an assistant professor of materials science and engineering at Lehigh University, says it has historically happened in one of three basic ways.

"Throughout human history," he says, "we've seen materials development occur over thousands of years of trial and error that advances across generations and entire civilizations. Efficiencies began to improve dramatically when society began to conduct physical experiments in increasingly specialized laboratories. This is certainly an improvement, but this approach comes with significant costs as we conduct time-consuming, expensive experiments. Even more recently, though, scientists have begun to use computational simulation to drive research in a wide array of fields, including materials discovery. This use of digital technologies is far more efficient than either of the previous methods, yet even here, we can do better."

Boosting the speed of materials discovery is but one goal of an extensive new project being conducted by a research team from Lehigh and the University of California at Berkeley, funded with about $600,000 from the National Science Foundation (NSF) through its newly announced TRIPODS+X program.

"Recent advances in what are called embarrassingly parallel computational methods have enabled machine learning that can draw conclusions from raw data by considering an interlocking multitude of co-dependencies that would otherwise be beyond human comprehension," Agar continues. "Currently, in order to develop an understanding of these deep, nuanced relationships in the data, we use computational techniques that amount to brute-force computation to perform a logical chain of highly efficient functions. It's like using a flamethrower to crisp up a crème brûlée."

Joshua Agar, Assistant Professor, Materials Science and Engineering, Lehigh University

During the course of the project, Agar and his team will develop what they term "an efficient Bayesian-guided computational framework" to guide the development of a neural network, a computing system modeled on the human brain and nervous system, that will turbo-charge the hunt for new and advanced materials with improved electrical, mechanical, thermal, and magnetic properties.

According to Agar, the project will apply "deep learning neural networks" to perform the operations associated with traditional physics-based computational simulation more efficiently. The team hopes its work will ultimately speed other scientists' discovery and synthesis of new materials; if it succeeds, the team also believes the technologies it is creating could have an impact well beyond materials development.

"We believe that the increased efficiency of our neural network model may facilitate the asking of scientific questions that are currently computationally intractable," he says. "The proposed work is specifically focused on enabling a neural network to discover what we call strain-induced polar phases and phase competition in materials. But working with our partners at Berkeley, we believe that some of the foundational data science methods developed through this effort may also help to support astronomy's understanding of the large-scale structure of the universe—cosmology in its grandest sense. It is even possible that these concepts will prove to be applicable to computational simulations across a wide range of scientific disciplines and adjacent fields."

"These application areas have little to do with the work conducted in my lab, arranging atoms into crystals and pulling on them to perform various tasks. But the underlying data science is, in fact, directly applicable. It certainly demonstrates how fundamentally valuable research in data and computational methods can prove to be across a host of domains, and thus supports the notion of clustering researchers with different perspectives around similar problems."

Joshua Agar

In this way, Agar believes the TRIPODS+X program is an ideal fit for his research focus and, more broadly, for Lehigh's strategic directions.

"I'm proud our lab serves in some ways as an intersection point for Lehigh's new Institute for Functional Materials and Devices, the Institute for Data, Intelligent Systems and Computation, and the Nano Human Interfaces Initiative," he says. "Across our campus, Lehigh is showing its commitment to team research at the intersection of disciplines. The NSF's TRIPODS+X initiative, in supporting projects like these, demonstrates broader belief in this approach as well."

Earlier in September, the NSF announced $8.5 million in TRIPODS+X grants to expand the scope of the cross-disciplinary TRIPODS institutes into broader areas of engineering, science, and mathematics. In total, NSF will support 19 collaborative projects at 23 universities. The funded teams will bring new perspectives to bear on complex and entrenched data science problems.

"The multidisciplinary approach for addressing the increasing volume and complexity of data enabled through the TRIPODS+X projects will have a profound impact on the field of data science and its use," said Jim Kurose, assistant director for Computer and Information Science and Engineering at NSF, in the agency's September 11 announcement. "This impact will be sure to grow as data continues to drive scientific discovery and innovation."